Just-the-Biz Limited

Database Services

Highlights

Over the years, everyone’s career will contain certain accomplishments, certain creations or maybe products that will form the highlight of their efforts. Here are a few of those things that have featured in my story so far, and in which I take some degree of pride. Unfortunately for me, most of my highlights would probably only be of interest to people in the IT business.

This may be the only forum in which I can possibly discuss these highlights, as when at home I am enthusiastically explaining, the latest marvel of code I have crafted or diving deep into the intricacies of a new idea I’ve just had, my wife, with her ever-patient smile, often reminds me that while my technical triumphs might rival the engineering of the pyramids, what she really wants to know is whether I've remembered to take out the trash. It’s her gentle way of saying that while my coding might be elegant, our lives still run on good old-fashioned practicality. And usually, it also prompts me to run outside - and take out the trash.

British Telecommunications plc

Oracle DBA and Developer

XML Parser for the Datawarehouse Volume processing.

Here, were my thoughts on speeding up an internal business process that had to read through millions of XML documents daily. The definition of the XML document was well understood and published (i.e. it had an XSD). The challenge was to process XML as fast as possible. This was a side-line project and was more for intellectual challenge than anything else. It was my aim to pre-process the XSD document into a set of code modules. The code would present a number of C functions that would be used to ‘consume’ characters read from the incoming XML document. A spreadsheet provided the XML parsing functional flow and each incoming character would be used to lookup, the function to be used to consume the character (based on its value). This ‘program’ would be saved in an 2D array in C, and each incoming character could be used to ‘lookup’ the function that should be used to process it (or to consume it). It’s the ultimate state machine in program form. Each incoming character could alter the state that the machine moved to next, so that the next character could be properly consumed with the correct function.

Yes, there was a bit of supportive coding around this, but, the solution itself meant the consumption of characters could run as fast as a function pointer could be pulled from memory and then executed, something that C happens to be really good at doing. This part actually resolved to a very small piece of code. When all supportive functions were created and present, the consumption of an XML document could be achieved simply by executing the following code..

#include "globals.h"

#include "acts.h"

#define BUFFERSIZE 10485760

unsigned char buffer[BUFFERSIZE];

int ibufptr, isize;

unsigned long lbytes_processed=0;

int main()

{

init_actions();

do{

lbytes_processed+=isize=fread(buffer,1,BUFFERSIZE,stdin);

for(ibufptr=0; ibufptr<isize; (*f_acts[ictx=ctx[c=buffer[ibufptr++]]])());

} while(!feof(stdin));

}

When executed, the process was capable of opening multiple parallel streams of SQL*Loader, separate sub-documents from the XML document and stream different sections of the document into different SQL*Loader channels for loading directly into the database.

I stopped working on this when the other team achieved a processing rate of 70 records per second, this rate had been set by the Business as the lower requirement. Since the business was not interested in breaking processing-speed-records I stopped developing this solution. But, I had demonstrated that my solution worked at: 700 records per second.

Just-the-Biz Limited

SkiMax iOS Application, Front and Backend Code for displaying Piste Maps

This part of my SkiMax App relates to the collection, preparation, and loading of Piste-Map data from the Open Snow Map project into a back-end database.

For this I divided the world into my own bespoke ‘tiles’ that represent regions of the map. Each tile is saved into a mySQL database table and contains Vector Data on Pistes, Cable Cars, Chairlifts etc, (all the wonderful lift types you find at a ski resort).

This information is pulled from back-end servers by the application and is then rendered as polygons on the 3D map terrain of the Apple Maps.

There is no API in Apple maps that deals with the animation of objects on the map. Animation requirements in my case involve the creation of lifts and gondolas that have the appearance of moving in the direction of the lift. This is achieved by defining multiple lines that overlap with very thick lines for which a particular dot-dash profile is defined. By varying the starting location profile of these dotted lines, the lifts on the map appear to move.

The management of the vector tiles is handled with a Objective C class that can send bulk-sets of tile codes to the server for a rapid status check on their current state. If a tile’s release state is later than the one held locally, then an update is made to retrieve the latest vector data for that tile…

The whole process is automated at the back-end and has been running for many years. It took me two weeks to integrate piste-map data into my Skiing app and I am kind of proud of how it works and has continued to work over the years.

Hays plc

Contract Oracle Development DBA

Use of Functional SQL

I have loved working contract for Hays plc.

They operate in many countries and use Oracle for many of the Business critical database systems. During my work here, I have had cause to write many SQL reports, sometimes in the form of views that can then be used by the application to render certain information that can be quite complex to understand. I am passionate about the integrity of the data, and I have provided many reviews of code submitted, ‘to be executed against production’. Sometimes, the complexity of a SQL statement can get out of hand. Anyone who has worked with denormalised databases will understand this. I have found that constructing ‘functional’ SQL can have a double payback when used to construct complex queries.

Functional SQL is my term for SQL that comprises normal SQL and user-defined functions, that are normally applied in the ‘where-clause' to simplify a query.

Suppose you have a core table, the ENTITY_TABLE that must be consulted to verify that every entity you use is active (status = ‘A’). Now let’s say you have five tables that hold different entities, and that you have a query that has to select from each of these, but, only if all entities are active.

SELECT

cand.full_name,

job.job_title,

cont.full_name,

org.client_name,

app.application_date

FROM

main_candidate cand,

main_contact cont,

main_job job,

main_organisation org,

main_applications app,

link_applicaitons link,

entity_table ent_cand,

entity_table ent_cont,

entity_table ent_job,

entity_table ent_org,

entity_table ent_app

WHERE

cand.reference = link.candidate AND

cont.reference = link.contact AND

job.reference = link.job AND

org.reference = link.organisation AND

app.reference = link.application AND

cand.reference = ent_cand.entity_id AND ent_cand.status = 'A’ AND

cont.reference = ent_cont.entity_id AND ent_cont.status = 'A’ AND

job.reference = ent_job.entity_id AND ent_job.status = 'A’ AND

org.reference = ent_org.entity_id AND ent_org.status = 'A’ AND

app.reference = ent_app.entity_id AND ent_app.status = 'A'

/

SELECT

cand.full_name,

job.job_title,

cont.full_name,

org.client_name,

app.application_date

FROM

main_candidate cand,

main_contact cont,

main_job job,

main_organisation org,

main_applications app,

link_applicaitons link

WHERE

cand.reference = link.candidate AND

cont.reference = link.contact AND

job.reference = link.job AND

org.reference = link.organisation AND

app.reference = link.application AND

all_entity_active(cand.reference, cont.reference, job.reference, org.reference, app.reference)

/

With the LHS the SQL is written in full and requires that each entity, to be tested, is linked to another copy of the entity table to validate its status. Queries like this are very common. Now suppose you create a small function: entity_active (that checks the status of the one entity_id passed as a parameter), as follows:

CREATE OR REPLACE FUNCTION entity_active(

pin_entity_id IN NUMBER

) RETURN BOOLEAN

IS

b_return_state BOOLEAN;

BEGIN

b_return_state := FALSE;

FOR rec IN (SELECT 1 FROM entity_table WHERE entity_id = pin_entity_id AND status = ‘A’)

LOOP

b_return_state := TRUE;

END LOOP;

RETURN b_return_state;

END;

/

Now create a function that checks five in one go…(this could be overloaded to provide for for checking of different numbers of entities in one go)...

CREATE OR REPLACE FUNCTION all_entity_active(

pin_entity_id_1 IN NUMBER, pin_entity_id_2 IN NUMBER, pin_entity_id_3 IN NUMBER, pin_entity_id_4 IN NUMBER, pin_entity_id_5 IN NUMBER

) RETURN BOOLEAN

IS

BEGIN

RETURN( entity_active(pin_entity_id_1) AND entity_active(pin_entity_id_2) AND entity_active(pin_entity_id_3) AND entity_active(pin_entity_id_4) AND

entity_active(pin_entity_id_5) );

END;

/

By making use of these, 'on the shelf functions', it makes every access to verify an entity’s status much simpler. SQL on the RHS (above) is logically equivalent to the original.

Note on performance.

Of course, operating this across millions of entities would be much slower that writing the SQL in full, and the full query would allow Oracle to plan for the volumes encountered. But, in a scenario where the volumes of checks are small and governed by other more selective query expressions, this method provides a way to greatly simplify the complexity of your queries and enhance the overall quality of your code. For example, once you have such a function, you can then define the behaviour in dealing with NULL values within the function itself, a process that is much more complex in general SQL expressions. And if tackled in the functions, it would affect all queries that use these functions, and only has to be considered once, instead of in every SQL statement that requires this check.

Schemation Technologies

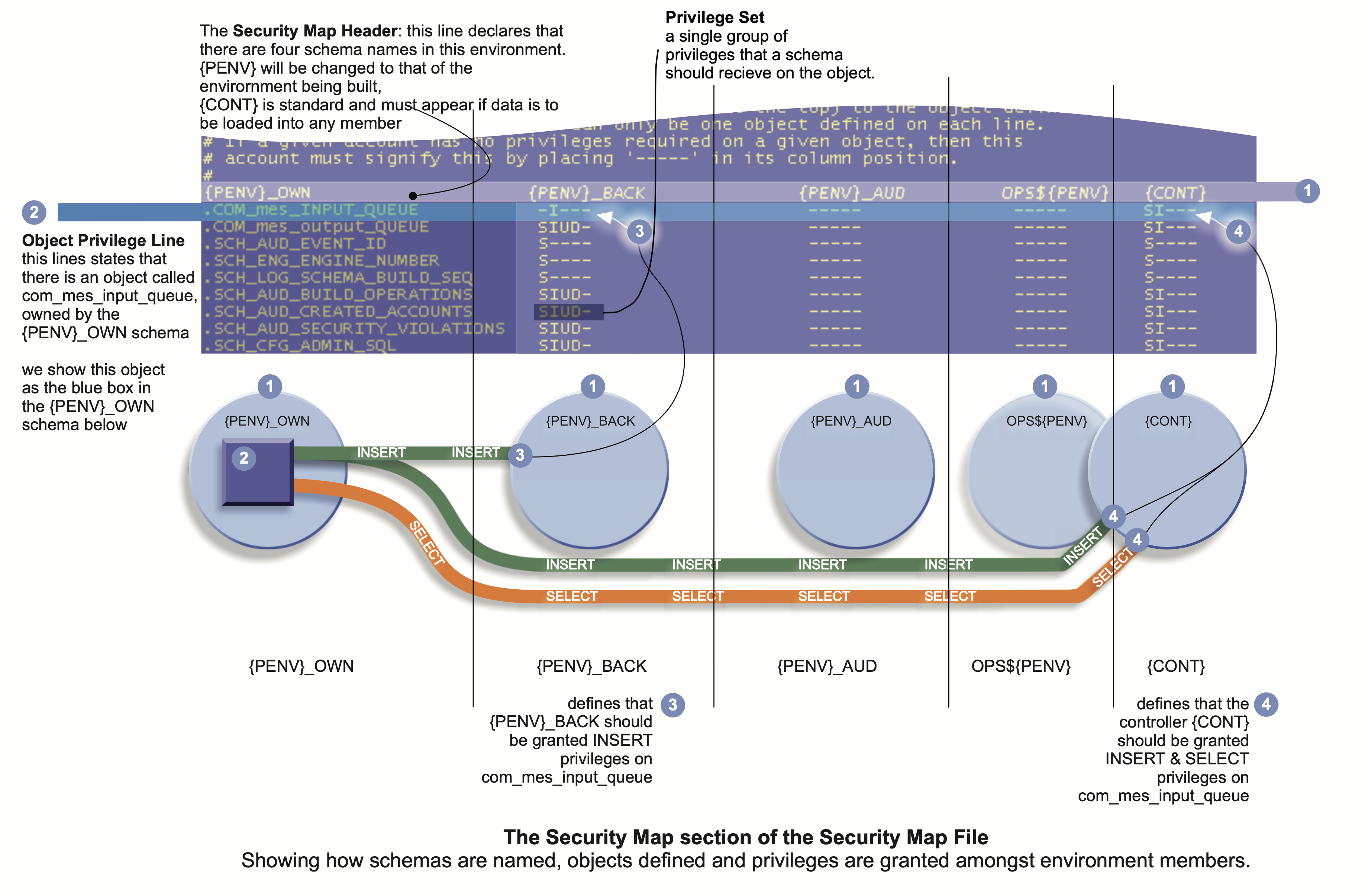

Security Map Design and Implementation

within Schemation Database Environment Manager

Oracle Database Environment Manager for DevOps

When working for the startup-company Schemation Technologies, (founded my myself and three like-minded colleagues), I devised a method of representing the schemas within an database environment, together with the object grants, synonyms and system privileges that make up such an environment. This method is easy to define in a simple text file and is interpreted by our database environment manager (still available in GitHub on request).

Our Database Environment Manager (DEM) provides an off-the-shelf database build process, that is capable of the destruction and complete rebuild of a multi-schema interconnected database environment - with a single invocation method. The product offers modularised DB object builds, and modularised data load.

It is possible, for example, for a developer to run a multi-schema DB environment build, that he/she uses, using a single click in the web-interface. The web-enabled interface allows the developer to chose what type of environment is desired choosing from a list of local repositories. (e.g. Today, do I want my personal DB environment to be a copy of the Australian support system or the UK support system?). The Schemation DEM allows this, all auditable through its web-interface.

For this, I designed and coded all database internal components for this DEM, including coding the parallel running environment-build engines, which communicate through DBMS pipes, with an engine controller, SQL*Loader operations and the main schemation build process, which is written in perl. I wrote an Oracle SQL statement ‘extractor’, which parses files filled with DDL statements into discrete units of execution that the DEM will load and then execute. The data-load component of this process examines the foreign key relationships of the schema and determines the order in which it must load all the table data. It even detects a foreign key referential loop, and temporarily disables a constraint in the loop, before proceeding with the load, re-enabling it on completion.

This is a very mature product and can be used to provide developers with complete control over their multi-schema development database environments. Its embryonic prototype was used in Sapient to provide developer-level control (for their environments) for 80 Java developers, hosted within just one Oracle database.

Though we could not make a commercial success of the company, we are all still very proud of the product we developed.